Explore the critical role of HIPAA compliance in AI verification tools for healthcare, addressing data security, access controls, and breach management.

AI verification tools are transforming healthcare by automating key tasks like patient identity checks and insurance verification. However, handling sensitive patient data requires strict adherence to HIPAA regulations, which safeguard Protected Health Information (PHI). Non-compliance can result in severe penalties, data breaches, and loss of patient trust.

Here’s what you need to know:

Key Takeaway: AI tools like MedOps streamline administrative tasks while embedding HIPAA compliance into every step. From encryption to access controls, compliance is non-negotiable for protecting patient data and maintaining trust.

HIPAA's Privacy, Security, and Breach Notification Rules outline how AI verification tools must handle protected health information (PHI). These regulations create a structured framework that governs every step of PHI management, from access protocols to addressing breaches.

The HIPAA Privacy Rule defines the conditions under which AI systems can access and use PHI. It specifies when patient data can be processed, which can complicate AI operations like model training that may fall outside standard treatment, payment, and healthcare operations (TPO) categories. For instance, using PHI to train AI models often requires explicit patient authorization.

AI systems are also bound by the "minimum necessary" standard: they may access only the least amount of PHI required for their task. For example, an AI tool verifying patient eligibility should not retrieve detailed medical histories. This creates tension with how many AI systems work, since they often rely on large datasets to identify patterns or make predictions.

The HIPAA Security Rule adds another layer of protection, requiring AI tools to implement strong technical, administrative, and physical safeguards to maintain PHI's confidentiality, integrity, and availability.

The rapid adoption of AI in healthcare has brought compliance challenges. By 2024, AI usage among physicians had nearly doubled from the year before, even as healthcare data breaches surged. Cyberattacks often target systems, including AI tools, that handle sensitive data such as names, addresses, and Social Security numbers. For example, the February 2024 ransomware attack on Change Healthcare, Inc. was later estimated to have affected about 190 million individuals, the largest healthcare breach reported to date. In another case, an AI vendor left 483,000 patient records across six hospitals exposed without proper authorization controls for weeks.

While these safeguards aim to protect PHI, breaches still occur. When they do, a swift response is crucial, as outlined in the next section.

The HIPAA Breach Notification Rule (45 CFR §§ 164.400-414) mandates that covered entities and business associates notify affected parties after a breach of unsecured PHI. For AI verification tools, this means integrating notification protocols into their design and operations.

A breach is the acquisition, access, use, or disclosure of PHI in a manner the Privacy Rule does not permit. It is presumed to require notification unless a documented risk assessment shows a low probability that the PHI has been compromised. Notification applies only to unsecured PHI, meaning data that has not been rendered unusable, unreadable, or indecipherable to unauthorized persons, for example through encryption that meets HHS guidance. This makes encryption a key safeguard for AI tools: properly encrypted data generally does not trigger notification even if accessed without authorization, provided the decryption key was not also compromised.

Business associates managing AI tools must notify the covered entity without unreasonable delay and no later than 60 calendar days after discovering a breach. The covered entity must then notify affected individuals within the same 60-day outer limit, notify prominent media outlets if more than 500 residents of a state or jurisdiction are affected, and notify the Secretary of Health and Human Services within 60 days if the breach affects 500 or more individuals.

For smaller breaches affecting fewer than 500 individuals, the covered entity may log them and report to the Secretary annually, no later than 60 days after the end of the calendar year in which they were discovered. Notifications to individuals must describe the breach, the types of information exposed, the steps individuals can take to protect themselves, the measures the organization is taking in response, and how to contact the organization for more information.
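The deadline arithmetic above can be sketched as a small helper. This is a minimal illustration under stated assumptions, not legal advice: `BreachFacts` and `notification_deadlines` are hypothetical names, `phi_secured` is assumed to be determined separately (for example, PHI encrypted per HHS guidance with keys not compromised), and the risk assessment is assumed to have already concluded that notification is required. The 60-day figures are outer limits; notice must still go out without unreasonable delay, and the business-associate-to-covered-entity step is left out.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class BreachFacts:
    discovered: date
    affected_total: int
    affected_by_state: dict[str, int]  # residents affected per state or jurisdiction
    phi_secured: bool  # assumption: True only if encryption met HHS guidance and keys were not exposed

def notification_deadlines(b: BreachFacts) -> dict[str, object]:
    """Outer-limit notification deadlines under the Breach Notification Rule.

    Sketch only: the risk assessment is assumed to have already
    concluded that notification is required.
    """
    if b.phi_secured:
        return {}  # secured PHI does not trigger breach notification
    outer_limit = b.discovered + timedelta(days=60)
    plan: dict[str, object] = {"individuals": outer_limit}
    # Media notice applies per state or jurisdiction with more than 500 affected residents.
    media_states = sorted(s for s, n in b.affected_by_state.items() if n > 500)
    if media_states:
        plan["media"] = (outer_limit, media_states)
    if b.affected_total >= 500:
        plan["hhs"] = outer_limit  # large breach: report to the Secretary within 60 days
    else:
        # Small breach: annual report due 60 days after the calendar year ends.
        plan["hhs"] = date(b.discovered.year, 12, 31) + timedelta(days=60)
    return plan
```

For a breach discovered on March 1, 2024 that affects 600 residents of one state, every deadline in the returned plan falls on April 30, 2024.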

To manage these risks, healthcare organizations should update their Business Associate Agreement templates to address AI-specific concerns, such as clear breach notification timelines and permitted uses of PHI in AI systems. Regular HIPAA risk assessments are also vital, especially when introducing new AI technologies that handle PHI.

AI verification tools manage large amounts of Protected Health Information (PHI) while adhering to strict HIPAA regulations. The way these systems store, process, and secure patient data plays a critical role in meeting compliance standards.

AI verification tools typically rely on two main storage setups: cloud-based infrastructure and on-premises solutions. Each option comes with its own set of HIPAA compliance requirements and operational challenges.

Cloud-based systems distribute PHI across multiple servers, and the cloud provider's handling of that data is governed by a business associate agreement (BAA) that spells out data handling protocols. However, these systems face particular challenges when AI models need real-time PHI access for verification tasks, because traditional batch processing methods often cannot meet such demands.

On the other hand, on-premises solutions provide healthcare organizations with greater control over PHI storage. This setup requires significant investments in infrastructure, as well as the implementation of security measures, backup systems, and disaster recovery plans. Larger healthcare systems with robust IT infrastructure often prefer this approach to keep sensitive data under their direct management.

Real-time processing adds another layer of complexity. It demands secure and immediate access to PHI, efficient data analysis, and meticulous audit trails, which can strain caching and memory management systems.

To address these challenges, many AI verification tools use hybrid models that separate PHI storage from AI computations. In this setup, sensitive data stays in secure, HIPAA-compliant databases, while AI algorithms work on de-identified or tokenized data. This approach reduces the risk of PHI exposure while maintaining the analytical precision needed for effective verification.

Organizations using AI verification tools must also set clear policies for data retention and deletion, so that PHI is kept only as long as verification tasks and legal obligations require. Such policies help AI systems balance operational efficiency with strict compliance requirements.

Effective de-identification is a cornerstone of secure AI operations, significantly reducing the risk of PHI exposure. When PHI is properly de-identified in line with HIPAA standards, it is no longer considered PHI and falls outside the Privacy Rule, giving AI systems more flexibility in handling and processing data.

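HIPAA outlines two methods for de-identification. The Safe Harbor method requires removing 18 specified identifiers (such as names, geographic subdivisions smaller than a state, all date elements other than the year, and Social Security numbers), with no actual knowledge that the remaining data could identify a patient. The Expert Determination method relies on a qualified expert applying statistical or scientific principles to conclude that the risk of re-identification is very small.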
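To make the Safe Harbor approach more concrete, here is a partial sketch. It handles only a few of the 18 identifier categories (direct identifiers, dates, ZIP codes, and ages over 89), so it is an illustration rather than a complete de-identification routine. The field names are hypothetical, and `restricted_zip3` is assumed to be supplied by the caller from current Census-based HHS guidance listing three-digit ZIP prefixes that cover 20,000 or fewer people.

```python
from datetime import date

# Hypothetical field names. A complete Safe Harbor pass must cover all 18
# identifier categories, including identifiers buried in free-text notes.
DIRECT_IDENTIFIERS = {"name", "ssn", "mrn", "email", "phone", "street_address"}

def age_on(birth: date, today: date) -> int:
    # Subtract one if this year's birthday has not happened yet.
    return today.year - birth.year - ((today.month, today.day) < (birth.month, birth.day))

def safe_harbor_subset(record: dict, restricted_zip3: set[str], today: date) -> dict:
    out: dict = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            continue  # drop direct identifiers entirely
        if field == "birth_date":
            age = age_on(value, today)
            if age > 89:
                out["age"] = "90+"  # aggregate ages over 89; keep no birth year
            else:
                out["birth_year"] = value.year
                out["age"] = age
        elif field == "zip":
            prefix = value[:3]
            # Sparsely populated three-digit areas must be replaced with "000".
            out["zip3"] = "000" if prefix in restricted_zip3 else prefix
        elif field.endswith("_date"):
            out[field[: -len("_date")] + "_year"] = value.year  # keep the year only
        else:
            out[field] = value
    return out
```

Fields the function does not recognize pass through unchanged, which is exactly why a real implementation needs an explicit review of every column rather than a blocklist like this one.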

Tokenization is another widely used technique. It replaces sensitive data - like Social Security numbers - with non-sensitive tokens, enabling verification tasks without exposing the original information. The original data is securely stored in a separate, tightly controlled token vault.
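A minimal in-memory sketch of the tokenization pattern follows. `TokenVault` is a hypothetical class standing in for a real vault service, which would be a separate, encrypted, access-controlled store; here the authorization decision is reduced to a boolean flag for brevity. Tokens come from Python's `secrets` module, so they carry no information about the original value.

```python
import secrets

class TokenVault:
    """Hypothetical in-memory stand-in for a separate, tightly controlled token vault."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token for a repeated value so records can still be matched.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_urlsafe(16)  # random; not derived from the value
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str, caller_authorized: bool) -> str:
        # Assumption: a real vault would check the caller's identity and log the request.
        if not caller_authorized:
            raise PermissionError("detokenization requires explicit authorization")
        return self._token_to_value[token]
```

Because the same value always maps to the same token, a verification model can match records on tokens without seeing the underlying Social Security number. The trade-off is that repeated tokens reveal which records share a value, so some deployments prefer per-context tokens.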

Synthetic data generation is also gaining traction. This method creates artificial datasets that mimic the statistical properties of real PHI without containing actual patient information. Fully synthetic data that contains no real patient records generally falls outside HIPAA, although the PHI used to generate it remains protected. The harder problem is technical: producing datasets that are representative enough to be useful for verification tasks.

Some tools employ dynamic de-identification, which adjusts the level of data protection based on the specific task. For simpler checks, heavily de-identified data may suffice, while more detailed verification scenarios might require access to additional data elements under stricter controls. This approach demands rigorous access management and clear policies to ensure appropriate use of data.

Another emerging method is differential privacy, which introduces calibrated noise into datasets or query responses. This makes it difficult to determine whether an individual’s data was included, offering strong privacy protections. While still developing in healthcare, differential privacy shows promise for balancing accuracy and privacy in AI systems.
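As an illustration of the idea (not a production library), the classic Laplace mechanism for a single counting query looks like this. The function name and record layout are assumptions; real deployments also need to track the cumulative privacy budget across repeated queries, which this sketch does not do. Smaller values of `epsilon` add more noise and give stronger privacy.

```python
import random
from collections.abc import Callable

def dp_count(records: list[dict], predicate: Callable[[dict], bool],
             epsilon: float, rng: random.Random) -> float:
    """Differentially private count using the Laplace mechanism.

    A counting query has sensitivity 1 (adding or removing one patient
    changes the count by at most 1), so Laplace noise with scale
    1 / epsilon gives epsilon-differential privacy for this one query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    # The difference of two independent exponential draws with rate epsilon
    # (mean 1 / epsilon) is Laplace-distributed with scale 1 / epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise
```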

De-identification practices must be continuously monitored and updated. As AI models grow more advanced, they may uncover patterns that could compromise previously secure de-identified data. Regular assessments are essential to ensure these techniques remain effective against evolving threats and advancements in AI capabilities.
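One common way to run such an assessment, swapped in here as an example rather than a HIPAA requirement, is to measure k-anonymity: the size of the smallest group of records that share the same quasi-identifiers (for instance ZIP prefix, birth year, and sex). The sketch assumes records are plain dictionaries and that the organization decides which fields count as quasi-identifiers and what minimum `k` is acceptable.

```python
from collections import Counter

def _group_sizes(records: list[dict], quasi_identifiers: list[str]) -> Counter:
    # Count how many records share each combination of quasi-identifier values.
    return Counter(tuple(r.get(q) for q in quasi_identifiers) for r in records)

def k_anonymity(records: list[dict], quasi_identifiers: list[str]) -> int:
    """Return k, the size of the smallest equivalence class (0 for no records)."""
    sizes = _group_sizes(records, quasi_identifiers)
    return min(sizes.values()) if sizes else 0

def groups_below_k(records: list[dict], quasi_identifiers: list[str], k: int) -> list[tuple]:
    """Quasi-identifier combinations shared by fewer than k records."""
    return [combo for combo, n in _group_sizes(records, quasi_identifiers).items() if n < k]
```

Re-running the check whenever new data is added shows whether `k` is drifting down; a falling value is a signal to generalize fields further (for example, widening age bands) before the dataset is reused.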

The "minimum necessary" standard is all about restricting access to Protected Health Information (PHI) to just what’s needed for a specific task. This becomes tricky when AI verification tools are involved, as they need to process patient data efficiently while still adhering to strict privacy rules.

AI verification systems have to strike a balance between operational efficiency and privacy. Unlike traditional systems where humans manually request specific data, AI tools often require broader access to perform their tasks. This creates a unique challenge: managing access in a way that meets compliance requirements while allowing the AI to function effectively.

To address this, precise access controls are essential. These controls must adapt to the specific needs of each verification scenario. For example, an AI tool checking insurance eligibility might only need demographic and coverage details. On the other hand, a system verifying clinical information may require access to certain medical history data. Each use case demands a tailored approach to access.

Modern AI tools address this challenge with contextual access management: before granting access, the system evaluates factors such as the task at hand, the user's role, and the minimum data required. This keeps PHI exposure tightly controlled, so each operation accesses only the information it needs.
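A minimal sketch of purpose-based, minimum-necessary access follows. The policy table, role names, task names, and field names are hypothetical; in practice the policy would live in a managed access-control service rather than a Python dictionary. The key design choice is to fail closed: a request for any field outside the approved set is rejected rather than silently trimmed or widened.

```python
# Hypothetical policy: the PHI fields each (role, task) pair is approved to read.
MINIMUM_NECESSARY: dict[tuple[str, str], frozenset[str]] = {
    ("eligibility_bot", "insurance_eligibility"):
        frozenset({"patient_id", "birth_date", "member_id", "payer_id"}),
    ("clinical_reviewer", "prior_authorization"):
        frozenset({"patient_id", "member_id", "diagnosis_codes", "procedure_codes"}),
}

def fetch_minimum_necessary(record: dict, role: str, task: str,
                            requested: set[str]) -> dict:
    allowed = MINIMUM_NECESSARY.get((role, task))
    if allowed is None:
        raise PermissionError(f"no access policy for role={role!r}, task={task!r}")
    excess = requested - allowed
    if excess:
        # Fail closed instead of returning a partial or widened view.
        raise PermissionError(f"not needed for {task}: {sorted(excess)}")
    return {f: record[f] for f in requested if f in record}
```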

Another layer of protection is data masking, where sensitive information - like full Social Security numbers - is partially hidden. This allows AI systems to complete their tasks without exposing unnecessary details.
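Partial masking of a Social Security number can be as simple as the following sketch, which keeps only the last four digits. The function name and output format are illustrative assumptions; masking like this suits display and confirmation screens, while the full value stays in the protected store.

```python
import re

def mask_ssn(ssn: str) -> str:
    """Return the SSN with all but the last four digits hidden."""
    digits = re.sub(r"\D", "", ssn)  # accept "123-45-6789" or "123456789"
    if len(digits) != 9:
        raise ValueError("expected a 9-digit SSN")
    return "***-**-" + digits[-4:]
```

For example, `mask_ssn("123-45-6789")` returns `"***-**-6789"`.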

Role-based access controls (RBAC) align closely with the minimum necessary standard. They ensure that users or systems only access PHI relevant to their specific role or function. Permissions are assigned based on job responsibilities, limiting unnecessary exposure.

Healthcare organizations typically implement multiple access levels within their AI systems.

AI systems performing cross-functional tasks add complexity. For example, verifying a patient’s identity might require access to demographic, clinical, and financial data. Advanced RBAC systems address this with temporary access extensions - granting expanded permissions for specific tasks and then reverting to baseline access once the task is complete.

Service accounts used by AI tools should operate with the most restrictive permissions possible. Many organizations also use time-based access controls, limiting these accounts to specific hours or operational windows.
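Temporary access extensions on top of a restrictive service-account baseline can be sketched with expiring grants. `AccessManager`, the baseline table, and the scope names are hypothetical; a real system would persist grants, record who approved each one, and write every decision to the audit trail. Because each grant carries its own expiry time, access falls back to the baseline automatically once the task window closes, with no cleanup job required for correctness.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical baseline: the narrow scopes each service account always holds.
BASELINE: dict[str, set[str]] = {"verification_service": {"demographics", "coverage"}}

class AccessManager:
    def __init__(self) -> None:
        self._grants: list[tuple[str, str, datetime]] = []  # (principal, scope, expires_at)

    def grant_temporary(self, principal: str, scope: str, ttl: timedelta, now: datetime) -> None:
        """Extend a principal's access to one extra scope for a limited time."""
        self._grants.append((principal, scope, now + ttl))

    def is_allowed(self, principal: str, scope: str, now: datetime) -> bool:
        if scope in BASELINE.get(principal, set()):
            return True
        # Expired grants are simply ignored, so access reverts to the baseline.
        return any(p == principal and s == scope and now < expires
                   for p, s, expires in self._grants)
```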

Regular audits of access permissions are crucial. Over time, permissions can expand unnecessarily - a phenomenon known as permission creep. Periodic reviews help ensure that access remains aligned with current roles and responsibilities.
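A periodic review can start from a simple comparison of granted permissions against recent usage, as in this sketch. The data shapes and the 90-day idle window are assumptions for illustration; flagged permissions are candidates for human review, not automatic revocation.

```python
from datetime import datetime, timedelta

def stale_permissions(granted: dict[str, set[str]],
                      last_used: dict[tuple[str, str], datetime],
                      now: datetime,
                      max_idle: timedelta = timedelta(days=90)) -> dict[str, set[str]]:
    """Return, per principal, the permissions unused within max_idle (or never used)."""
    report: dict[str, set[str]] = {}
    for principal, perms in granted.items():
        stale = {
            perm for perm in perms
            if (principal, perm) not in last_used
            or now - last_used[(principal, perm)] > max_idle
        }
        if stale:
            report[principal] = stale
    return report
```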

For more granular control, some organizations are adopting attribute-based access control (ABAC). ABAC considers additional factors like the sensitivity of the data, the time of access, and the specific context of the verification task. This approach provides more refined control over PHI access in complex scenarios.
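For comparison with RBAC, an ABAC decision evaluates several attributes of the request at once. In the sketch below, the attribute names, the 1-to-3 sensitivity scale, the approved purposes, and the 07:00 to 19:00 window for the most sensitive data are all hypothetical policy choices. Returning the names of failed rules, rather than a bare yes or no, makes denials easier to explain and to log.

```python
from dataclasses import dataclass
from datetime import time

@dataclass(frozen=True)
class AccessRequest:
    subject_role: str
    subject_clearance: int     # hypothetical scale: 1 (low) to 3 (high)
    resource_sensitivity: int  # same hypothetical scale
    purpose: str
    local_time: time

# Hypothetical policy: every rule must pass for access to be granted.
RULES = {
    "clearance_covers_sensitivity":
        lambda r: r.subject_clearance >= r.resource_sensitivity,
    "approved_purpose":
        lambda r: r.purpose in {"insurance_eligibility", "prior_authorization"},
    "prior_auth_needs_clinical_role":
        lambda r: r.purpose != "prior_authorization" or r.subject_role == "clinical_reviewer",
    "high_sensitivity_in_hours":
        lambda r: r.resource_sensitivity < 3 or time(7, 0) <= r.local_time <= time(19, 0),
}

def failed_rules(request: AccessRequest) -> list[str]:
    """Return the names of rules the request fails; an empty list means permit."""
    return [name for name, rule in RULES.items() if not rule(request)]
```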

Audit trails are essential for demonstrating compliance with HIPAA’s minimum necessary standard. They create a detailed record of PHI access, which is vital for accountability and breach investigations.

An effective audit trail logs key details: who accessed what data, when, why, and from where. For AI systems, this includes the specific task being performed, the data elements accessed, and the justification for the access.
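One way to capture those fields is a structured, append-only record per access. The `PhiAccessEvent` fields below mirror the who, what, when, why, and where described above; the names are hypothetical, and `patient_ref` is assumed to be a token or internal ID so that the log itself does not become another copy of PHI.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

@dataclass(frozen=True)
class PhiAccessEvent:
    actor: str                      # who: user ID or AI service account
    actor_type: str                 # "human" or "ai_service"
    patient_ref: str                # token or internal ID, not raw PHI
    data_elements: tuple[str, ...]  # what was accessed
    task: str                       # the verification task being performed
    justification: str              # why the access was needed
    source: str                     # where: host, IP address, or service name
    timestamp: str = field(         # when, as a UTC ISO 8601 string
        default_factory=lambda: datetime.now(timezone.utc).isoformat())

def to_log_line(event: PhiAccessEvent) -> str:
    """Serialize one event as a single JSON line for a centralized log store."""
    return json.dumps(asdict(event), sort_keys=True)
```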

Real-time monitoring adds another layer of oversight. These systems analyze logs as they're generated, flagging unusual patterns like excessive access requests or data retrieval outside normal hours. For example, if an AI system starts requesting data it doesn't typically need, the monitoring system can raise an alert.

Automated alerts are particularly important for AI systems handling large volumes of PHI. Alerts can be configured for high-risk scenarios, such as bulk data exports or access to records by users outside their usual scope. These alerts enable quick responses to potential violations.
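The rule-based alerts described above might look like the sketch below. The bulk threshold, business-hours window, and per-actor expected scopes are hypothetical values an organization would tune; the function only returns reasons, leaving notification and escalation to whatever alerting system is in place.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class AccessEvent:
    actor: str
    timestamp: datetime
    records_returned: int
    data_elements: frozenset[str]

# Hypothetical tuning values.
BULK_THRESHOLD = 500
BUSINESS_HOURS = range(6, 20)  # 06:00 to 19:59 local time
EXPECTED_SCOPE = {"eligibility-bot": frozenset({"member_id", "payer_id", "birth_date"})}

def alert_reasons(event: AccessEvent) -> list[str]:
    """Return the reasons this event should raise an alert (empty if none)."""
    reasons = []
    if event.records_returned > BULK_THRESHOLD:
        reasons.append("bulk_access")
    if event.timestamp.hour not in BUSINESS_HOURS:
        reasons.append("off_hours")
    expected = EXPECTED_SCOPE.get(event.actor)
    if expected is not None and not event.data_elements <= expected:
        extra = sorted(event.data_elements - expected)
        reasons.append("out_of_scope:" + ",".join(extra))
    return reasons
```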

Behavioral analytics take monitoring a step further by learning normal access patterns for both human users and AI systems. If activity deviates significantly from these patterns, the system flags it for investigation. This helps identify both malicious actions and system misconfigurations that could expose PHI unnecessarily.
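Production behavioral analytics use richer models, but the core idea of learning a baseline and flagging large deviations can be shown with a simple z-score over a principal's daily access counts. The seven-day minimum history and the three-standard-deviation threshold are assumptions chosen for illustration.

```python
from statistics import mean, pstdev

def is_anomalous(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's access count if it deviates from the baseline by more than z_threshold SDs."""
    if len(history) < 7:
        return False  # not enough history to learn a baseline yet
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return today != mu  # perfectly steady baseline: any change is unusual
    return abs(today - mu) / sigma > z_threshold
```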

Maintaining data integrity in audit logs is critical. Logs must be securely stored, protected from tampering, and regularly backed up. Many organizations use centralized logging systems to consolidate data from multiple AI tools, giving them a complete view of PHI access across the board.
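One common way to make logs tamper-evident is to chain entries with a keyed hash (HMAC), so that altering or reordering any earlier entry breaks verification of everything after it. The sketch uses only Python's standard library; the entry layout is hypothetical, the HMAC key is assumed to be held outside the logging system (for example in an HSM or key management service), and removing the newest entries is only detectable if the latest MAC is also recorded somewhere else.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64  # placeholder "previous MAC" for the first entry

def _mac(key: bytes, prev: str, event: dict) -> str:
    payload = prev + json.dumps(event, sort_keys=True)
    return hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()

def append_entry(chain: list[dict], event: dict, key: bytes) -> None:
    """Append an event whose MAC also covers the previous entry's MAC."""
    prev = chain[-1]["mac"] if chain else GENESIS
    chain.append({"event": event, "prev": prev, "mac": _mac(key, prev, event)})

def verify_chain(chain: list[dict], key: bytes) -> bool:
    """Recompute every MAC in order; any edit or reordering makes this False."""
    prev = GENESIS
    for entry in chain:
        expected = _mac(key, prev, entry["event"])
        if entry["prev"] != prev or not hmac.compare_digest(entry["mac"], expected):
            return False
        prev = entry["mac"]
    return True
```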

Finally, retention policies for audit logs must balance compliance needs against storage costs. HIPAA does not set a specific retention period for audit logs, but it does require covered entities to keep required Security Rule documentation for six years, so many organizations retain logs for at least six years as well. Given the volume of logs AI systems generate, long-term storage and retrieval need careful planning.

Encryption is one of the most important safeguards for Protected Health Information (PHI) in AI verification systems: if encrypted data is accessed without authorization, it remains unreadable without the decryption key.

These tools process vast amounts of sensitive health data, making them prime targets for cybercriminals. This reality highlights the need for strong encryption - not just to meet compliance standards but as a necessary step to protect both patients and businesses.

However, achieving a balance between fast data processing and stringent security measures can be tricky. Modern systems often rely on hardware-accelerated encryption and advanced algorithms to reduce performance slowdowns while maintaining high security standards.

Encryption covers two key states of data: data at rest (e.g., stored patient records, training datasets, and backups) and data in transit (data moving between healthcare systems and internal components). Each state calls for its own encryption methods, but neither protects data while an AI system is actively computing on it.

The difficulty grows when AI systems need to process encrypted data. Traditional approaches often require decryption before computation, which can open the door to vulnerabilities. Advanced techniques like homomorphic encryption allow some computations to take place on encrypted data without the need for decryption. While promising, these methods are still being refined for practical use in healthcare.

To comply with HIPAA, AI verification tools need strong encryption. The Security Rule does not mandate specific algorithms; encryption is an "addressable" implementation specification, which means organizations must implement it where reasonable and appropriate or document why an equivalent alternative is used instead. Many systems follow National Institute of Standards and Technology (NIST) guidelines, and the Advanced Encryption Standard (AES) with 256-bit keys is a popular choice for securing sensitive healthcare information.

For data at rest, AES-256 encryption is commonly applied through various methods, including database-level encryption, file system encryption, or application-level encryption. Each approach has its own trade-offs in terms of security, performance, and complexity.

For data in transit, Transport Layer Security (TLS) 1.3 is the preferred protocol. It offers a faster handshake, removes legacy cipher suites, and uses ephemeral key exchange, which helps keep PHI secure as it moves between systems.

Additional protections include Perfect Forward Secrecy (PFS), which ensures that even if long-term keys are compromised, past communications remain secure. Digital certificates with pinning and regular rotation further defend against man-in-the-middle attacks.
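A client-side sketch using Python's standard `ssl` module shows two of these ideas: requiring TLS 1.3 and checking the server certificate against a pinned fingerprint. The pin set is a hypothetical value supplied per partner endpoint, and the function names are illustrative. This version pins the SHA-256 fingerprint of the whole DER-encoded certificate for simplicity, so the next certificate's fingerprint must be added to the set before each rotation; pinning the public key instead survives certificate renewal but requires certificate parsing that this sketch avoids.

```python
import hashlib
import hmac
import socket
import ssl

def make_client_context() -> ssl.SSLContext:
    """TLS client context: certificate and hostname verification on, TLS 1.3 only."""
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx

def certificate_matches_pin(der_cert: bytes, pinned_sha256: set[str]) -> bool:
    """Compare the certificate's SHA-256 fingerprint against the pinned set."""
    fingerprint = hashlib.sha256(der_cert).hexdigest()
    return any(hmac.compare_digest(fingerprint, pin.lower()) for pin in pinned_sha256)

def open_pinned_connection(host: str, pins: set[str], port: int = 443) -> ssl.SSLSocket:
    """Connect, complete normal TLS verification, then enforce the pin."""
    sock = socket.create_connection((host, port), timeout=10)
    try:
        tls = make_client_context().wrap_socket(sock, server_hostname=host)
    except Exception:
        sock.close()
        raise
    der = tls.getpeercert(binary_form=True)
    if der is None or not certificate_matches_pin(der, pins):
        tls.close()
        raise ssl.SSLError("server certificate does not match any pinned fingerprint")
    return tls
```

The pin check runs after the standard chain and hostname validation, so it narrows trust further rather than replacing normal certificate verification.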

Key management is another critical piece of the puzzle. Hardware Security Modules (HSMs) provide tamper-resistant environments for generating, storing, and managing encryption keys, and they can prevent keys from being exported, even by system administrators. Many platforms use envelope encryption, where data encryption keys (DEKs) are themselves encrypted with key encryption keys (KEKs). This setup simplifies key rotation: rotating a KEK only requires re-encrypting the comparatively small DEKs, not every record they protect.

Following encryption best practices is essential for keeping AI verification tools aligned with HIPAA requirements. End-to-end encryption should be the default to protect PHI throughout its entire journey, from collection to processing.

Key management processes should include regular, automated key rotation. Highly sensitive fields, such as Social Security numbers and medical record numbers, should also receive field-level encryption as an extra layer, while less sensitive fields remain available for processing.

Zero-knowledge architectures, where AI platforms never have access to unencrypted PHI, are another effective strategy. Regular encryption audits can help identify weaknesses in both encryption and key management setups.

Encrypting backup data is equally important. AI systems generate substantial amounts of backup data, including model snapshots and historical records. These backups should follow the same encryption standards as production systems, with separate key management to avoid single points of failure.

When AI tools are accessed through mobile devices, full-device encryption becomes critical. Mobile Device Management (MDM) solutions can enforce encryption policies and enable remote data wiping if devices are lost or stolen.

Balancing security and efficiency often requires optimization, such as hardware-accelerated encryption or specialized cryptographic libraries. Limiting the extra field-level encryption layer to the most sensitive fields, while baseline encryption still covers all data at rest and in transit, can also help keep the system responsive.

Disaster recovery plans must account for encryption key availability. Losing or corrupting keys can render encrypted PHI permanently inaccessible. Secure offsite storage of keys and regular testing of backup and recovery procedures are crucial to ensure continuity.

Finally, staff training is essential. IT teams must be well-versed in encryption practices, capable of identifying potential issues, and prepared to act swiftly in case of security incidents. As threats and technologies evolve, maintaining a knowledgeable team is key to staying protected.

As mentioned earlier, strong encryption and access controls play a significant role in reducing risks. However, non-compliance with HIPAA can still lead to costly consequences. When AI verification tools fail to meet HIPAA standards, the repercussions can include financial penalties, legal challenges, and damage to reputation. The use of AI adds another layer of complexity in managing Protected Health Information (PHI), making robust security measures and strict compliance protocols more crucial than ever. The ongoing enforcement actions by the Office for Civil Rights (OCR) underscore the importance of proactive risk management. To strengthen these efforts, it’s essential to understand the common scenarios that lead to HIPAA violations.

AI verification tools can be vulnerable to several common HIPAA violations.

HIPAA penalties scale with the severity of the violation. Civil penalties are tiered, ranging from relatively modest fines for violations the organization did not know about to steep fines for willful neglect, and knowingly obtaining or disclosing PHI in violation of HIPAA can lead to criminal charges. These consequences often result in operational disruptions, higher insurance premiums, and lasting damage to an organization's reputation.

This is why every layer of HIPAA compliance - whether it’s encryption, audit trails, or vendor agreements - is so critical. The potential fallout from non-compliance has led many healthcare organizations to invest heavily in comprehensive HIPAA compliance programs, especially as AI verification tools become more integrated into their operations.

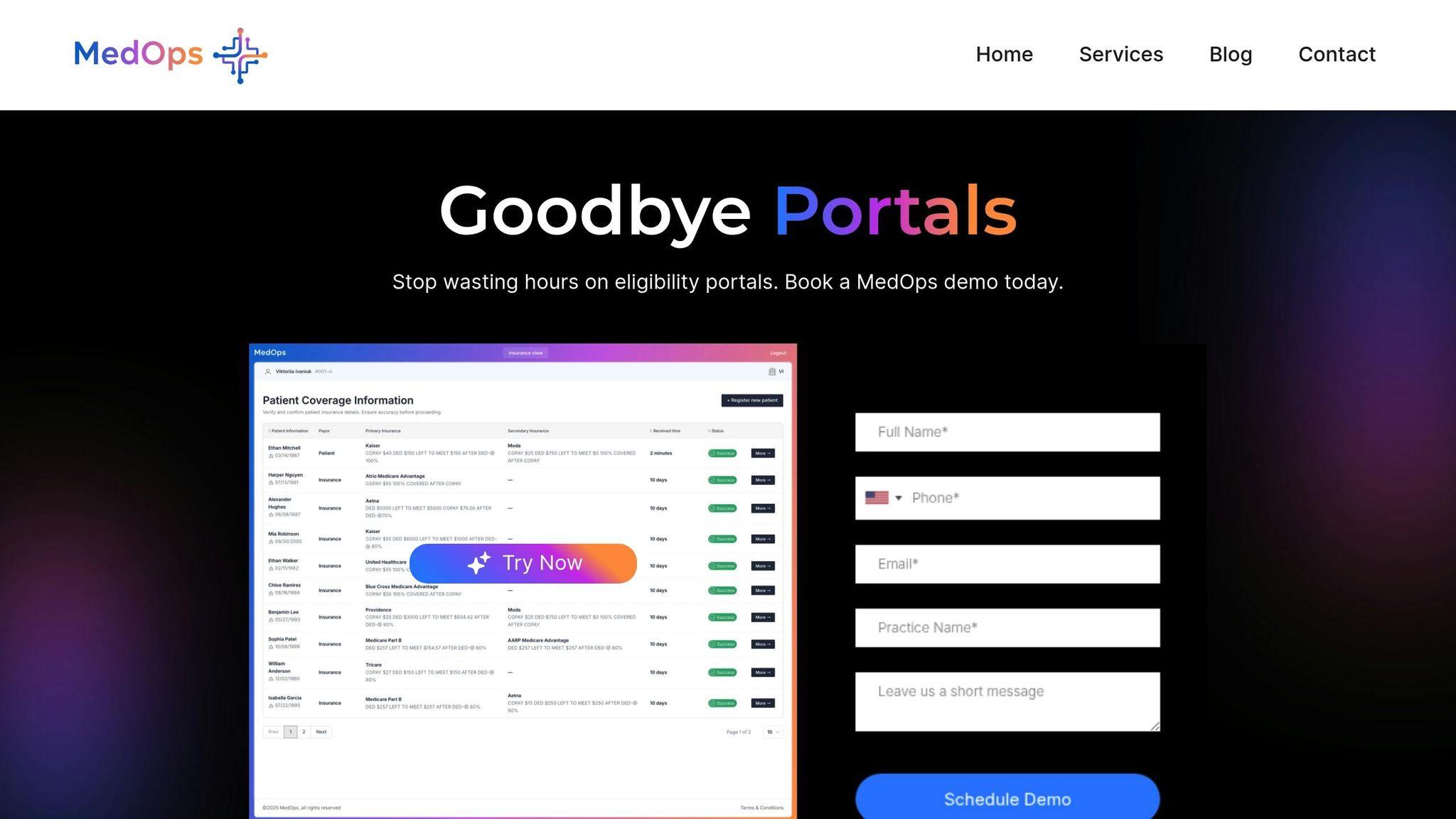

Healthcare organizations face the challenge of balancing operational efficiency with strict HIPAA compliance. MedOps steps in as an AI-powered solution designed to safeguard patient data while simplifying critical administrative tasks. Below, we’ll explore the platform’s standout features and how it enhances both operations and patient care.

MedOps takes the hassle out of insurance verification by automating the process. It connects directly to insurance databases for real-time validation of coverage, copayments, deductibles, and authorization requirements. This means less manual work and smoother patient eligibility checks. Plus, it integrates effortlessly with existing systems like EHRs, practice management tools, and billing platforms, making it easy to incorporate into your current workflow.

Whether it’s routine eligibility checks or handling more complex authorization tasks, MedOps is built to adapt. Healthcare providers can improve their processes without needing to overhaul their technology. And every feature is backed by robust HIPAA controls, ensuring compliance at every step.

By automating insurance verification, MedOps helps reduce check-in wait times, giving administrative staff more time to focus on patients. Its real-time capabilities catch coverage issues early, allowing providers to have upfront conversations about payment options and care plans. This not only reduces financial stress for patients but also strengthens their trust in the provider.

Beyond improving the patient experience, MedOps enhances revenue cycles by ensuring claims are processed accurately from the start. This reliability means fewer resources spent on fixing errors and more opportunities to reinvest in clinical care. And with its strong security measures, healthcare providers can enjoy these efficiency gains without compromising patient privacy.

Healthcare organizations face the dual challenge of meeting HIPAA requirements while leveraging AI to streamline operations. To achieve this, they must implement technical and administrative safeguards that complement their existing infrastructure.

Key measures include role-based access controls, detailed audit trails, and AES-256 encryption to secure protected health information (PHI) both at rest and during transmission. Additionally, practices like de-identification and anonymization help lower compliance risks by limiting exposure to identifiable patient data - an especially important step as AI models continue to evolve.

Failing to comply with HIPAA can lead to hefty fines, damage to reputation, and even criminal charges.

MedOps addresses these challenges head-on by embedding HIPAA compliance into its AI-driven insurance verification platform. This tool automates essential administrative tasks while strictly adhering to privacy and security standards. By integrating seamlessly with existing electronic health record (EHR) systems and practice management software, MedOps ensures these compliance measures are not just theoretical but actively support both improved workflow efficiency and patient care - without compromising data security.

For healthcare organizations exploring AI verification tools, compliance should be a built-in feature, not an afterthought. Platforms like MedOps show that it's possible to achieve operational efficiency and regulatory compliance simultaneously, laying a strong foundation for growth while safeguarding patient privacy at every step.

AI verification tools align with HIPAA regulations by implementing strong security protocols to safeguard sensitive patient information. They encrypt protected health information (PHI) both at rest and in transit, keeping the data secure throughout its lifecycle. Access is tightly controlled, limited to authorized personnel, and follows the minimum necessary standard, so only the data required for a specific task is used.

Moreover, these AI systems function within secure, HIPAA-compliant environments, adhering to strict procedures designed to prevent unauthorized access, data breaches, or misuse. Through these measures, AI verification tools enable healthcare organizations to uphold compliance and ensure patient privacy remains protected.

Failing to meet HIPAA regulations in AI-driven healthcare systems can pose serious risks. These include data breaches, unauthorized access to sensitive patient information, and weakened system security. Such incidents can severely impact patient privacy and erode trust in the healthcare provider.

The fallout from non-compliance can be harsh: steep fines, legal challenges, and damaged reputations. For healthcare organizations, this can mean disrupted operations and a loss of confidence from both patients and partners. Prioritizing strong compliance measures is critical to protect patient data and uphold both legal and ethical responsibilities.

Protecting patient data is a top priority in AI-driven healthcare systems, and encryption and de-identification play critical roles in ensuring this protection.

Encryption works by transforming sensitive information into a coded format that can only be unlocked with a specific decryption key. This process safeguards data both when it's stored and while it's being transmitted, making it far less vulnerable to unauthorized access.

De-identification, on the other hand, strips away personal identifiers like names, Social Security numbers, and medical record numbers. Removing these details makes it very difficult to link the data to specific individuals, enabling its use in analysis without compromising patient privacy, although de-identified datasets should still be reassessed periodically for re-identification risk.

When used together, these methods not only help meet HIPAA requirements but also significantly reduce the risk of data breaches or unauthorized access in AI-powered healthcare tools. They create a secure foundation for leveraging sensitive information responsibly.